Zeiss Helps You In The Comp

Reprinted with permission from Zeiss India. With Special Thanks to DigitalProduction.

Whoever works in comp will sooner or later adjust images – either because something was quickly thrown into disarray on the set and didn’t go as planned, or because a lack of time shattered the dream of obtaining extra data. It is therefore very likely that everyone doing manual adjustments has thought at least once: “Can’t the lens manufacturers just provide some information?”

One would think that precise distortion and shading data would already be standard – but they are not. For distortion there are lens grids, for shading only an approximation method. And “doing it quickly” on the set doesn’t always work, since minimal errors can ruin the entire effort. Then Zeiss comes along and says, “We’ll take care of it.”

At FMX, Zeiss introduced the “CinCraft Mapper.” CinCraft Mapper is a service that provides frame-accurate lens distortion and shading data for post-production. Everything is based on the shot’s metadata – dispensing with the need for blurred lens grids. We are talking to Marius Jerschke, who is in charge of business development at Mapper and is currently talking to all kinds of artists and studios.

Ever since his father took him to see “Blade Runner,” Marius knew he wanted to work in the film industry. He studied media technology and production at the East Bavarian Technical University in Amberg-Weiden, and after graduating with a bachelor’s degree, he started working directly for a small production company in Munich.

There, as VFX supervisor and art director, he mainly looked after advertising film clients. After seven years, Marius decided to make a switch. Since art and creativity have always been close to his heart, and since he has always been fascinated by new technologies that have a significant impact on the way filmmakers work, he joined Zeiss in October 2021 as Business and Ecosystem Development Manager for Digital Cinematography.

He works closely with the entire movie team at Zeiss worldwide, trying to launch innovative ideas for VFX under the CinCraft name.

DP: Hello Marius! First of all: What triggered the development of CinCraft?

Marius Jerschke: Digitization and the strong increase in VFX in the film industry are naturally also a concern for a leading lens manufacturer like Zeiss. We develop and build cinema lenses. That means we are familiar with everything that makes up the look of our lenses. So why not make these characteristics available for digital applications as well?

That is how the idea came about to provide digital or virtual images in addition to the physical lenses. That’s why we launched the CinCraft ecosystem and platform, which will support VFX and, in the future, other areas such as virtual production.

CinCraft Mapper is our small but fine solution in this universe. Ultimately, we would like to make all lens characteristics available at some point. For now, however, we will start with the two important properties, namely distortion and shading, i.e. the geometric distortion and the vignetting of the lens. These two are extremely important for compositing, and distortion is also interesting for match moving.

DP: This may be an absolute hack question, but shouldn’t it be called “CineCraft”?

Marius Jerschke: CC are our boss’s initials. No, jokes aside! Out of dozens of ideas, we soon found the combination of “Cinema” and “Craft” (meaning trade, skill, dexterity) appealing. We then looked at various combinations and stuck with CinCraft as the artificial word. We simply liked it best (laughs).

DP: And what exactly does the mapper do?

Marius Jerschke: To explain this better, let me back up a bit. In 2017, when we introduced our CP.3 lenses, we also introduced “eXtended Data,” our technology that provides all the important lens metadata for post-production. The goal back then was to make it much easier for VFX artists to apply the look of our lenses. eXtended Data builds on Cooke’s “/i log” and adds two more data sets, namely distortion and shading.

With a tailored post workflow, you could easily adjust distortion and shading in the shot footage and apply it to CGI. The limitation was, or rather is, that with eXtended Data you can only reap the benefits with the corresponding smart lenses so far.

With CinCraft Mapper, our goal is to use optical design data to provide distortion and shading data for all of our lenses. This now works independently of the system, as you can install the mapper anywhere or integrate it directly into any post workflow via our Command Line version. In addition, the mapper delivers all data frame-accurately, that is, data for every single frame and every lens setting. And this constitutes one of the crucial differences to the classic lens evaluation process or lens grid workflow.

These lens grids (checkerboard patterns) are filmed at a certain distance to determine the distortion of a lens. But a single grid shot only covers one focus distance, and it is known that the focus distance influences the distortion.

It is therefore necessary to repeat this process several times with other focus distances in order to obtain the most accurate data possible, between which one can interpolate. So you can see that this process is very time-consuming. And if the image is blurred or crooked, it will only lead to inaccurate or useless data. In summary, this process is time-consuming, error-prone, analog and unrewarding.
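The interpolation step of the classic lens grid workflow can be illustrated with a minimal sketch. It assumes, as a simplification, that each lens grid shot yields a single radial distortion coefficient `k1`; the focus distances and coefficients below are invented for illustration and are not measured lens data. The function name `k1_at` is hypothetical.

```python
import numpy as np

# Hypothetical lens grid results (illustrative values only):
# focus distance in metres -> radial distortion coefficient k1.
measured_focus_m = np.array([0.5, 1.0, 3.0])
measured_k1 = np.array([-0.020, -0.014, -0.010])

def k1_at(focus_m):
    """Linearly interpolate k1 between the measured focus distances.

    Outside the measured range, np.interp clamps to the nearest measurement.
    """
    return float(np.interp(focus_m, measured_focus_m, measured_k1))

# A focus distance between two grid shots gets a blended coefficient.
print(k1_at(2.0))
```

Every additional grid shot adds one more sample to interpolate between, which is why accuracy in this workflow scales with the time spent shooting and analyzing grids.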

Moreover, for shading data, a different approach would still have to be taken, which usually ends up in a “by eye” adjustment. In the rarest of cases, the grey card process is used here, in which a shot is taken of a well-illuminated white surface so that shading can be seen, which can then be recreated. So again, manual and analog.

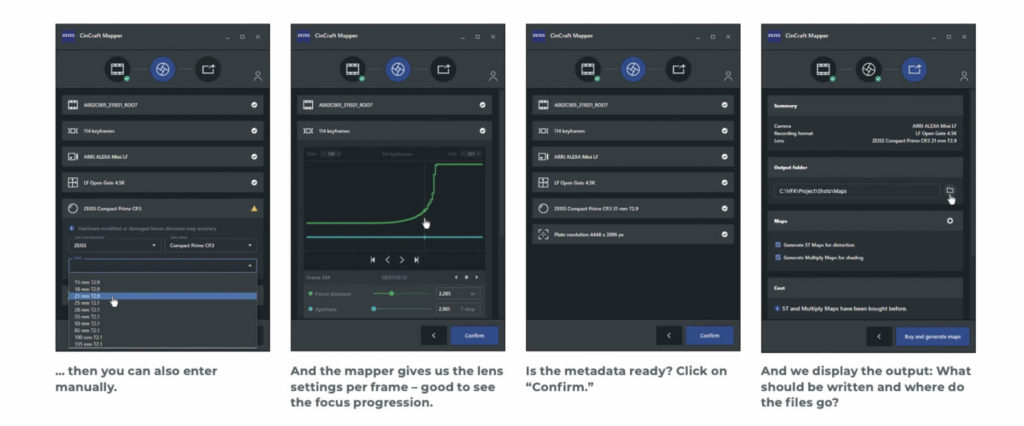

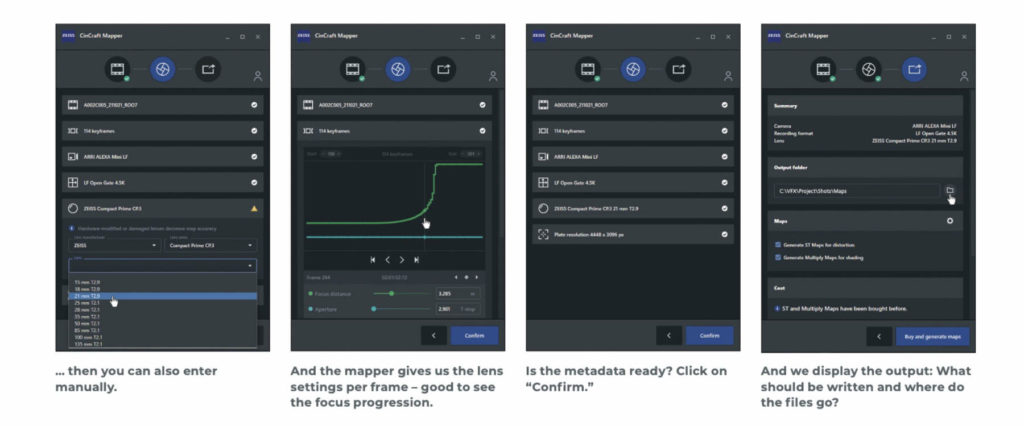

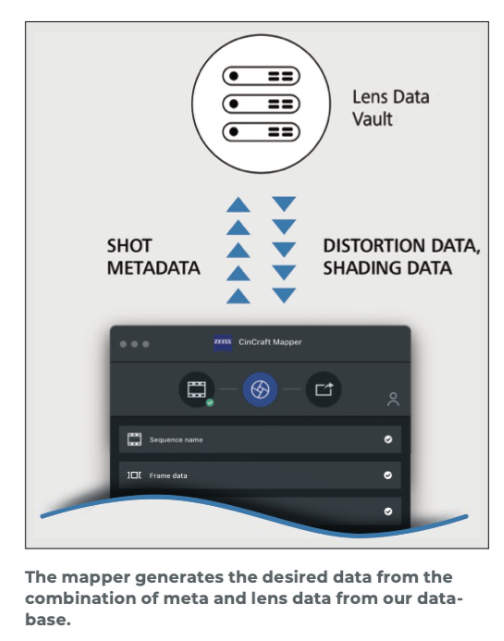

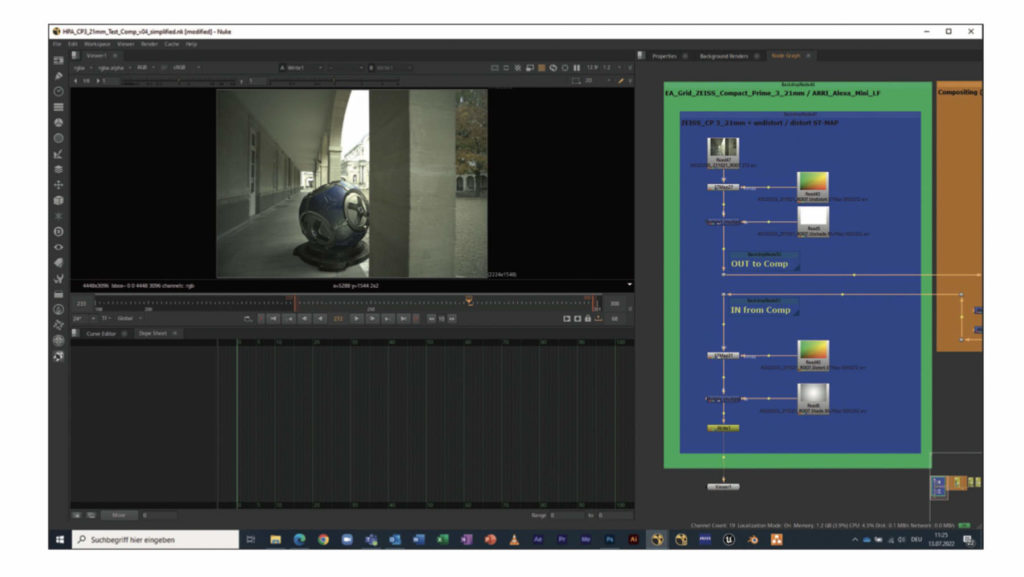

The mapper, on the other hand, works intuitively. Ultimately, everything happens in four simple steps: Import, Configure, Generate, Apply. First, the mapper needs certain data from the shot to be processed. The easiest way to get them is to drag and drop the shot into the client portal.

You can then check this data in the next step and correct or add to it if something is missing. When you confirm this, the mapper matches the entered metadata with our database, pulls the correct info for the corresponding lens, and calculates the cost from the combination. Once confirmed, the output data is generated locally on the computer and can then be used directly in compositing or match moving software.

DP: So, each frame is analyzed individually? And how do you see whether it worked out, for example when zooming in?

Marius Jerschke: The mapper looks at a certain part of the metadata. For each frame, these are the lens settings, that is, the changes in focus and aperture. In the second step, “Configure,” all key frames of the current shot can be displayed. The lens settings are displayed as graphs and numerical values. So you can see exactly where which setting was made during the shot.

As I said, these are currently graphs for the focus distance and the lens’s aperture. As soon as our zoom lenses are supported, there will be a third graph that maps the focal length changes.

These graphs are used to check what exactly is going on in the metadata and, at the same time, the mapper uses this input to create frame-accurate data. You can actually enter the graph manually and then create frame-accurate data even if you don’t have all the necessary data from the shot.

DP: And what if the DIT has lost the metadata?

Marius Jerschke: If the lens data or lens grids are expected but not received, or the shot files are corrupt, then manual input comes into play. The data the mapper needs is pretty simple, you can get it almost off the top of your head. You need the camera model and format (the used sensor area), the lens model and the frame settings; in other words, focus distance, aperture and focal length.

For example, you might know from a note that the entire film was shot in 4K with a Sony Venice. Zeiss Supreme Primes were used as lenses. Then you’ll already have information on three of four inputs. It’s a bit trickier for the frame settings. You would need exact notes, so that you get frame-accurate maps. But if this is missing, you can roughly estimate the distance in the shot or find something useful on the clapperboard.

In that case, you don’t get frame-accurate data, but it’s still better than nothing. In a professional environment, however, this should not be the case. Metadata is vital for VFX!

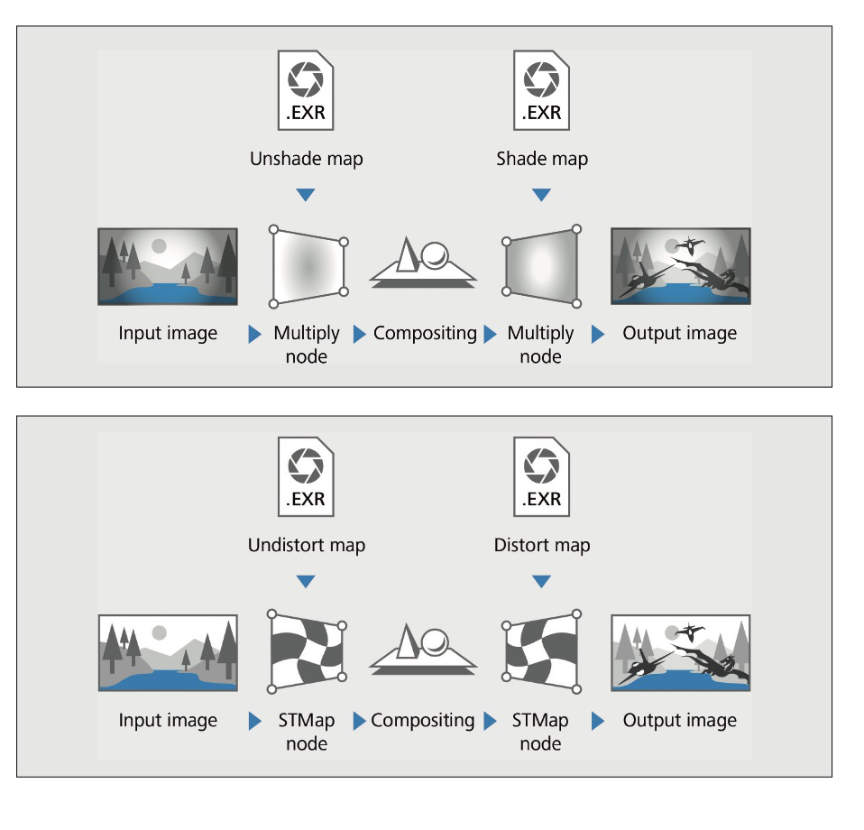

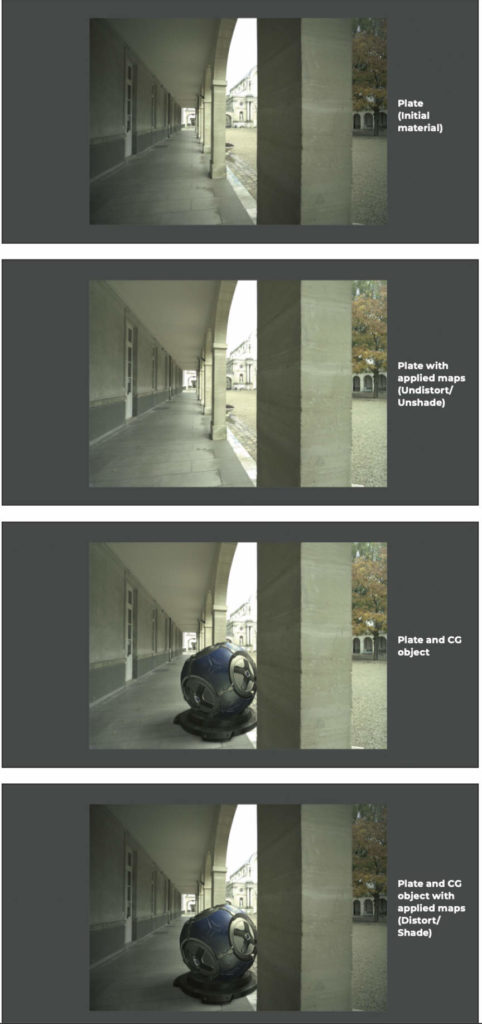

DP: And does it work the other way around to fit full-CG shots to plates?

Marius Jerschke: The mapper generates maps to remove and add distortion, and maps to lighten and darken the vignetting. So the idea is that an artist first removes the distortion and lightens the vignetting from the captured footage. Then he/she puts the CGI on top of it and adds the distortion and vignetting back to the assembled image as a whole.

If a shot is only CGI, you can just use the maps for the latter step and give it the right lens look. Or if you want to make a completely fictitious shot, but want it to have special distortion and shading properties, you can output everything with the mapper.

DP: How long – approximately – does the analysis take per frame?

Marius Jerschke: Nothing really needs to be analyzed. The correct metadata is simply taken from the shot and then, in combination with the lens data stored with us, the corresponding distortion and shading data is generated for further processing. Pixel information is not considered at all. So in case that’s even a concern: importing a simple CSV file with the corresponding metadata is also sufficient for the mapper.

The mapper needs some time to generate the output data. This is very short per frame; the decisive factor is the number of lens settings, in other words, how often the focus and the aperture were changed in the shot. The more settings there are, the longer it takes to generate the data.
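To make the idea of “lens settings” concrete, here is a minimal sketch that counts them from per-frame metadata in a CSV. The column names (`frame`, `focus_distance_m`, `t_stop`) and the sample values are hypothetical and do not reflect any real export format, and the counting rule (one setting per run of consecutive frames with the same focus and aperture) is an assumption about what counts as a setting, not the mapper’s documented behavior.

```python
import csv
import io

# Hypothetical per-frame metadata; column names and values are illustrative only.
SAMPLE_CSV = """frame,focus_distance_m,t_stop
1001,2.00,2.8
1002,2.00,2.8
1003,2.10,2.8
1004,2.25,2.8
1005,2.25,4.0
"""

def count_lens_settings(csv_text):
    """Count runs of consecutive frames that share the same (focus, aperture) pair."""
    reader = csv.DictReader(io.StringIO(csv_text))
    count = 0
    previous = None
    for row in reader:
        setting = (float(row["focus_distance_m"]), float(row["t_stop"]))
        if setting != previous:
            # Focus or aperture changed relative to the previous frame.
            count += 1
            previous = setting
    return count

print(count_lens_settings(SAMPLE_CSV))  # 4
```

Note that no pixel data is touched: under these assumptions, the metadata alone determines how much work a shot involves.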

We compared the time it takes the mapper to deliver the desired data with the working time for an artist’s lens grid analysis. So, for example, if an artist takes 30 minutes to do a lens grid or two, they end up with only two individual files for just those two focus distances of the lens grid shots. And these are then only the distortion data. The shading data is still missing. With the mapper, the artist takes a few minutes and gets frame-accurate data for every focus distance, every aperture change, and every focal length change. And that covers both distortion and shading.

DP: So I just load my shots in and out? And this works with all files?

Marius Jerschke: Currently, the Mapper supports a wide range of popular input formats. In the first version, we offer the customer the option of importing a shot as an image sequence in EXR format, as a CSV file or in the form of a ZLCF (Zeiss Lens Correction File) for the metadata extraction.

What’s important is that the customer can also combine multiple input formats of a shot during import to fill gaps in the metadata of a format.

Automatic metadata extraction works with the following input formats:

- Arri Raw Converter OpenEXR files

- Arri Meta Extract CSV files

- Red CineXPro OpenEXR files

- Sony Raw Viewer OpenEXR files

- Zeiss Lens Correction Files (ZLCF) v2/v3

The combined import allows you to fill in missing metadata by using two related metadata sources:

- Arri Meta Extract CSV Files & Nuke OpenEXR Files

- Arri Meta Extract CSV Files & Arri Raw Converter OpenEXR Files

- Zeiss Lens Correction Files v2/v3 & supported OpenEXR files

We are also working on supporting other formats all the way up to Raw. For lenses without an electronic interface, the lens data must be recorded in the camera using a cmotion cPRO/cPRO PLUS Lens Encoder System with data license in order to be read from the EXR or CSV file after conversion with the supported tools. Here, too, we are trying to gradually expand the support of lens encoder systems. The more projects the mapper can serve, the more studio pipelines we can make more efficient. CinCraft Mapper is currently available for Windows, macOS, Linux, and as a command line interface (CLI) version for direct implementation into an existing pipeline.

For the Linux distribution, we focused on Ubuntu. The desired data is available throughout. Thus we enable a relaxed distortion and shading workflow.

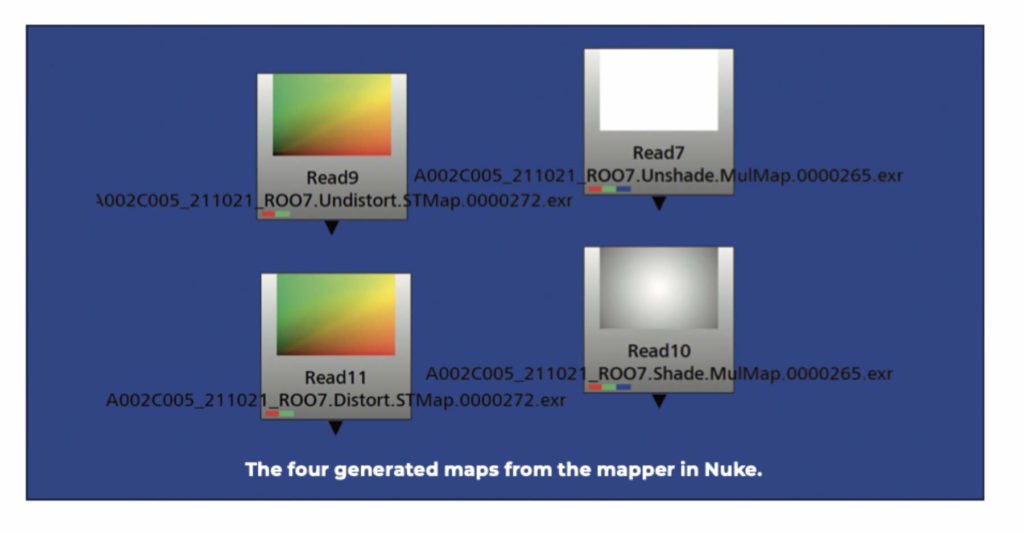

DP: And what do I get back from the mapper?

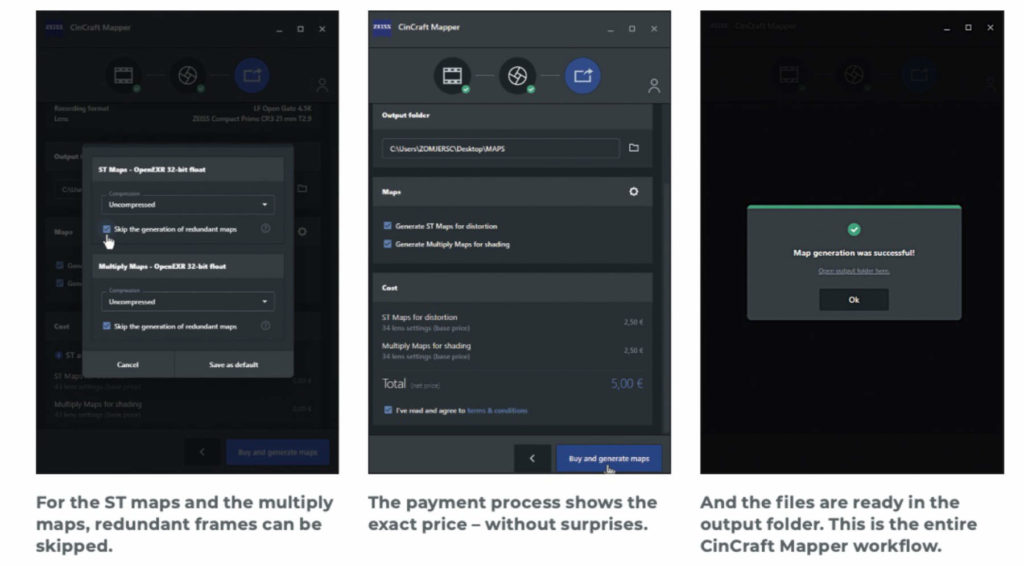

Marius Jerschke: The mapper generates files that can then be used directly in a variety of industry-standard software. These are ST Maps for Distortion and Multiply Maps for Shading. ST Maps are nothing more than a specific file format and the common standard when it comes to distortion. Multiply Maps is a neologism we created in the sense that these maps are simply applied via “multiply” image processing.
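How these two map types are typically applied can be sketched in a few lines of NumPy. This is a simplified illustration, not the mapper’s or any compositing tool’s implementation: it assumes an ST map stores, per output pixel, the normalized source coordinates (s, t) with the origin at the top left, and it uses nearest-neighbour lookup where real tools filter. All function names are hypothetical.

```python
import numpy as np

def apply_st_map(image, st_map):
    """Warp `image` (H, W, C) using `st_map` (H, W, 2) of normalized source coordinates.

    Assumptions: s runs left to right, t runs top to bottom, and the lookup is
    nearest-neighbour (production tools interpolate and filter instead).
    """
    height, width = image.shape[:2]
    src_x = np.clip(np.rint(st_map[..., 0] * (width - 1)).astype(int), 0, width - 1)
    src_y = np.clip(np.rint(st_map[..., 1] * (height - 1)).astype(int), 0, height - 1)
    return image[src_y, src_x]

def apply_multiply_map(image, multiply_map):
    """Scale each pixel by a per-pixel gain (H, W) to lighten or darken vignetting."""
    return image * multiply_map[..., np.newaxis]

def identity_st_map(height, width):
    """Build an ST map that leaves the image unchanged, useful as a sanity check."""
    s, t = np.meshgrid(np.linspace(0.0, 1.0, width), np.linspace(0.0, 1.0, height))
    return np.stack([s, t], axis=-1)

# Sanity check: an identity ST map and a gain of 1.0 return the input unchanged.
plate = np.random.rand(4, 6, 3)
warped = apply_st_map(plate, identity_st_map(4, 6))
graded = apply_multiply_map(warped, np.ones((4, 6)))
print(np.array_equal(graded, plate))
```

In a compositing package the same two operations correspond to an ST map warp node and a multiply (or merge-multiply) operation, which is why the output needs no custom plug-in.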

DP: And what workflows are supported so far?

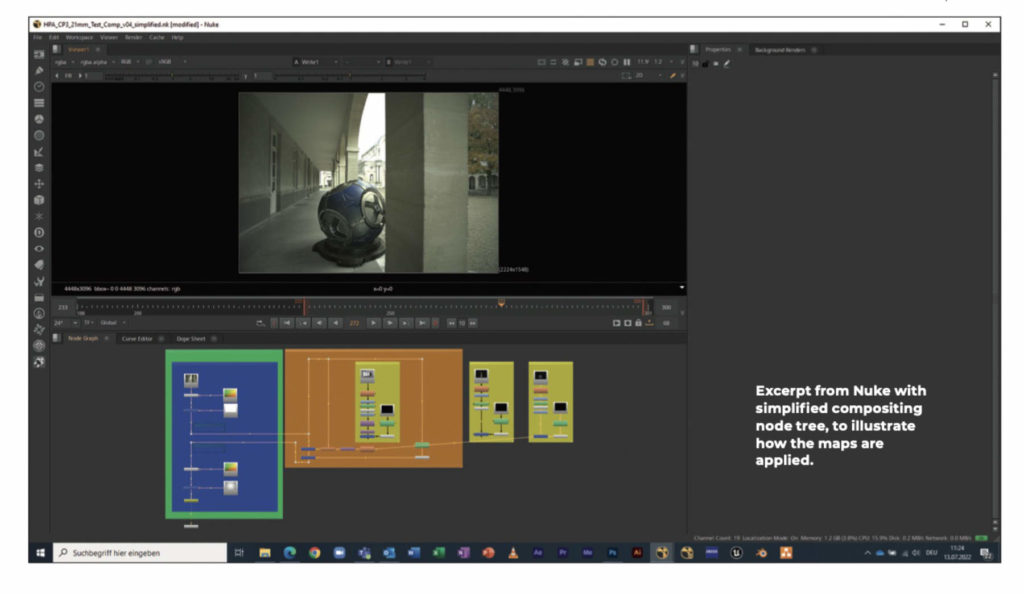

Marius Jerschke: We started by supporting the most common workflows and plan to expand that over time. We started with ST Maps and Multiply Maps because tools like Nuke, Fusion, Flame, 3DEqualizer, PF-Track and others are great at processing this data.

After Effects is currently not directly supported because it cannot handle ST Maps. However, we are aware that AE is used by many colleagues. Support for After Effects is therefore already in the works and will soon be available as an option in the mapper. As far as I know, this will also be the first professional distortion approach in After Effects.

To verify the whole thing, we used Nuke and other image processing software over and over again. We checked how our solution compared to the classic lens grid method. It turned out that the mapper actually maps the distortion slightly better. In combination with the frame accuracy, this is a great result.

DP: Let’s talk about the data: Who measures the lens distortion?

Marius Jerschke: Our optical designers simulate a lens during development before physical prototypes are built. In the process, rays are sent through the virtual lens, just as in the real world. It is thus a digital clone of a physical lens. This means that all the corresponding characteristics are available digitally.

It is precisely these data sets that we use for the mapper, in order to provide distortion and shading data.

The precision at Zeiss, both in manufacturing and in how closely the physical lenses match this simulation, is so high that the deviation between individual lenses is tiny. We can therefore apply this data to an entire model series. In theory, we could make it specific to the serial number, but our tests have shown that we don’t have to do that with our lenses.

Of course, this principle only works for unmodified lenses. However, we also have a small hint message in the mapper that addresses exactly this.

DP: You would think that with high precision there would be no more distortion?

Marius Jerschke: Our current spherical cine lenses actually already have very low distortion (older ones usually have more). Theoretically, it would be possible to design completely distortion-free lenses, but the costs and the size would once again increase significantly. A compromise is therefore necessary between different physical boundary conditions, so some distortion is always there.

In contrast, if we talk about anamorphic lenses, everyone who has ever worked with them knows that a correct distortion and shading workflow is even more important here. So these lens properties are highly dependent on the lens and important when it comes to VFX.

DP: Shouldn’t the colleagues from Angenieux, Cooke and Sony also have such data?

Marius Jerschke: We definitely want to talk to other manufacturers so that their lenses are also available in CinCraft Mapper. That would make the service even more attractive for artists. Of course, we don’t know how exactly each manufacturer processes their data.

But as a last resort, we also have the option of measuring and digitizing lenses, which is definitely what we would do in certain cases.

DP: And when we look behind the lens: So far, which cameras are even capable of recording this data?

Marius Jerschke: CinCraft is part of the cinematography division at Zeiss. So we started with the workflows of the major cinema camera manufacturers, such as Arri, Red and Sony. And we support pretty much all the models that are still in use. Beyond that, we’ll just have to see what the future brings.

DP: And if I’d like to use it: What does it cost?

Marius Jerschke: Here we have taken a different route than you might expect: there are no fixed subscription or license costs. We have developed a model where costs are only incurred if you actually use the mapper. And not everyone works every day with footage that is shot on Zeiss lenses.

Hence, the price is instead defined based on the number of shots and lens settings, that is, the changes of focus and aperture in one shot. There is a base price per shot and a price per lens setting. If the costs for the lens settings exceed the base price, you pay for the settings. Otherwise, you pay the base price.

Let’s take a shot with a focus pull as an example, where the focus shifts from a person in the foreground to a person in the background. The focus is therefore changed continuously.

This can quickly add up to 50 lens settings. In this case, you would then pay for the settings, for example 50 x 0.15 EUR = 7.50 EUR lens setting costs as opposed to 5.00 EUR base costs.
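The pricing rule described above amounts to taking the larger of two amounts. The following sketch uses the example figures from the interview (5.00 EUR base price, 0.15 EUR per lens setting); these are illustrative values rather than an official price list, and the function name `shot_cost` is hypothetical.

```python
from decimal import Decimal

def shot_cost(num_lens_settings,
              base_price=Decimal("5.00"),
              price_per_setting=Decimal("0.15")):
    """Return the cost of one shot in EUR.

    You pay the lens setting costs if they exceed the base price,
    otherwise the base price.
    """
    settings_cost = price_per_setting * num_lens_settings
    return max(base_price, settings_cost)

print(shot_cost(50))  # 7.50 (settings cost exceeds the base price)
print(shot_cost(10))  # 5.00 (settings cost of 1.50 is below the base price)
```

`Decimal` is used instead of floating point so that currency amounts stay exact.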

We compared all the set prices with the time spent and the added value of the data for an artist. It was important to us that the price is always cheaper than the working time that the artist would otherwise have to spend on the lens-grid analysis.

And if a generated map gets lost, you don’t have to pay twice. The second time it simply costs nothing. Our price just gets more and more attractive.

DP: And can you try it out now?

Marius Jerschke: Yes, we have been live since early May. Just go to cincraft.zeiss.com/mapper and create an account, called a “Zeiss ID.” Then you can download and install the Client Portal and get started without any obligation. We have deposited a EUR 50 starting credit for each account, which does not expire.

And if you’re an artist and don’t have any shots to try out yourself, we also offer free test footage for download that was shot with a variety of our lenses. So everyone can find out if the mapper is something they’re interested in.

If you want to work with CinCraft Mapper permanently, you have to create a studio account, which includes the necessary payment information.

In any case, I recommend anyone interested to take advantage of this testing opportunity. As Hugo Guerra always says, “You need to be better at VFX!” And with our new distortion and shading workflow, we’re helping you do just that.

DP: What does the future hold?

Marius Jerschke: Currently, our development team is working hard to implement zoom lenses and anamorphic lenses. Then the mapper will be able to present its full range of features soon.

Also, as already mentioned, there will be After Effects support and we are working on compatibility with more formats. That means users will end up with more power and will be able to use the mapper for all sorts of scenarios. So there’s a lot in store.

DP: With so much on the list, are there any other “digital cinematography” tools coming soon from Zeiss?

Marius Jerschke: Oh well, that’s still a secret (laughs). But yes, we are already working on other exciting projects. We are aware that people always want something new quickly. But developing reliable tools at the highest level simply takes time.

As I said, CinCraft Mapper is only the very first step and I am already excited and looking forward to everything that is to come. After all, I am still a VFX artist, too.